We will now configure a two-node active/passive HA cluster running web services on CentOS 6.5. The environment is set up in VMware vCenter.

The nodes are named Node1-CMAN and Node2-CMAN. Node 1 has three hard disks: hard disk 1 holds the OS partitions, and hard disks 2 and 3 are shared storage, shared with Node 2. Note the SCSI controller settings for the shared disks. SCSI Bus Sharing has three options: None, Virtual and Physical.

- If None is selected, the hard disk cannot be shared between virtual machines.

- If Virtual is selected, the hard disk can be shared among virtual machines on the same physical server.

- If Physical is selected, the hard disk can be shared between virtual machines on any host. This is the option I have selected.

We place each shared disk on a different Virtual Device Node, and therefore on its own SCSI controller, for better I/O performance: "SCSI (1:0)" for the second disk and "SCSI (2:0)" for the third disk, on both VMs.

The second hard disk is the quorum disk (qdisk) used for quorum voting. The third hard disk holds the data for the services that are to be made highly available.

Both nodes use two network adapters, "VM Network" and "RH_Cluster_Lan". The first carries production traffic; the second is the heartbeat network that the cluster services use to communicate with each other.

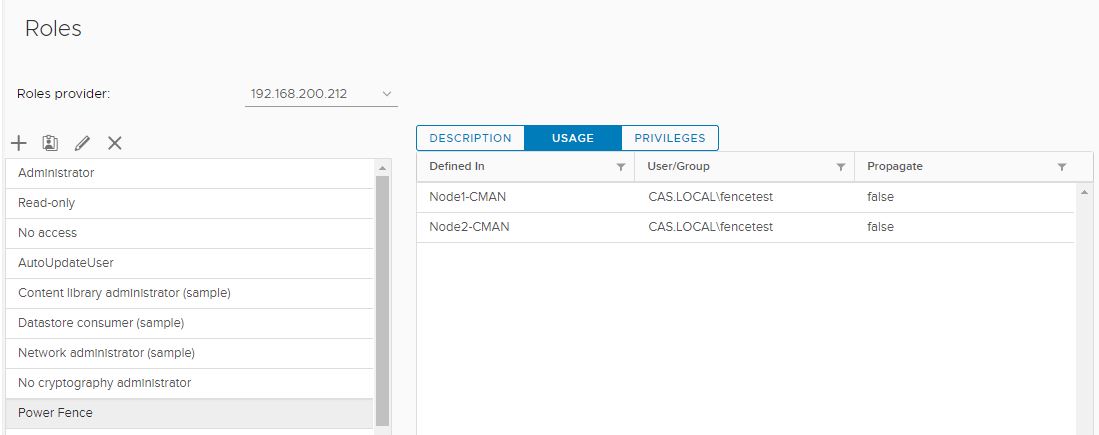

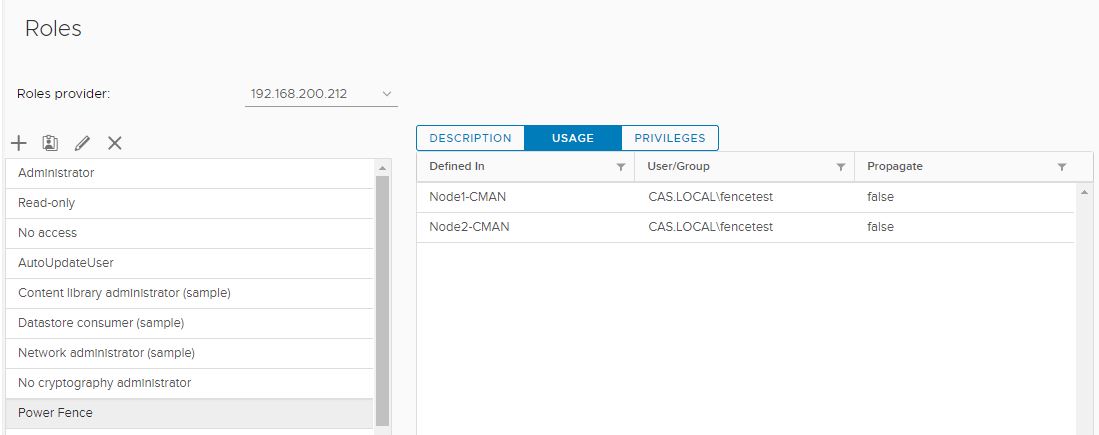

I have created a fencing user in vCenter with the following roles.

The roles apply to the following virtual machines.

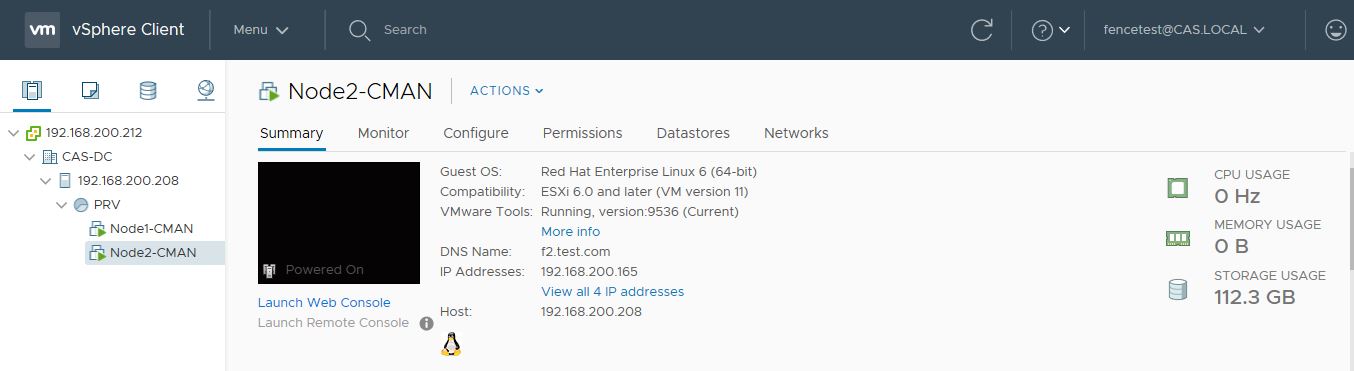

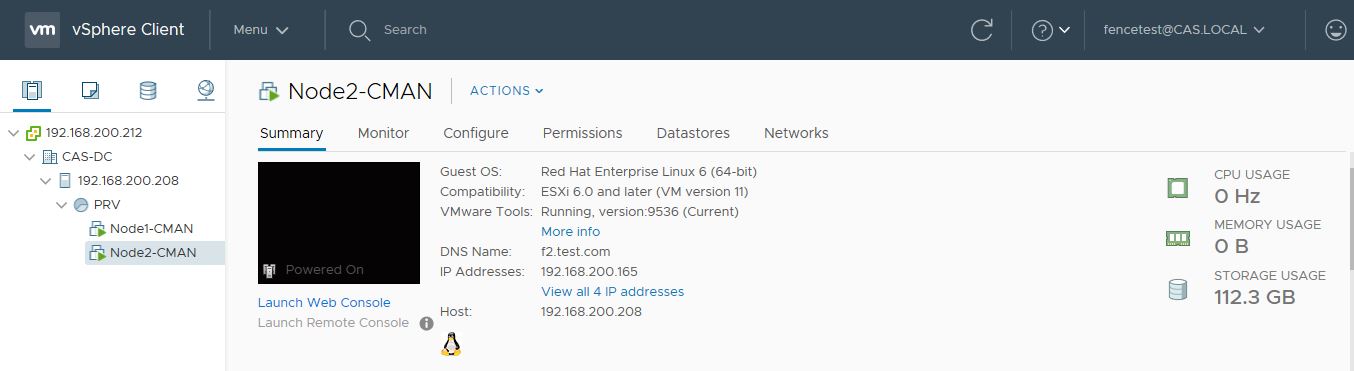

After logging in to vCenter as the fence user, we can see that it has permissions on the following virtual machines.

We can check which power options are available by right-clicking a VM and selecting Power.

I have also disabled NetworkManager, iptables, ip6tables and SELinux. The services required by the cluster are already installed.


Check Block Devices

We can check the disk block devices and partitions as below.

[root@f1 ~]# lsblk
NAME                        MAJ:MIN RM  SIZE RO TYPE MOUNTPOINT
sda                           8:0    0   60G  0 disk
├─sda1                        8:1    0  500M  0 part /boot
└─sda2                        8:2    0 59.5G  0 part
  ├─VolGroup-lv_root (dm-0) 253:0    0 48.8G  0 lvm  /
  ├─VolGroup-lv_swap (dm-1) 253:1    0    6G  0 lvm  [SWAP]
  └─VolGroup-lv_home (dm-2) 253:2    0  4.8G  0 lvm  /home
sdb                           8:16   0    2G  0 disk
sdc                           8:32   0   16G  0 disk
sr0                          11:0    1 1024M  0 rom

Configure Hosts File

The hosts file is identical on both nodes.

[root@f1 ~]# cat /etc/hosts
127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
# VIP
192.168.200.177 f.cluster.com
# Cluster Internal Heartbeat IP
192.168.115.172 nodehb1.cluster.com nodehb1
192.168.115.175 nodehb2.cluster.com nodehb2
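The heartbeat names that later become the cluster node names (nodehb1.cluster.com and nodehb2.cluster.com) should resolve to the same heartbeat addresses on both nodes, since cman relies on these names to find the local node and the interface for cluster traffic. A minimal sketch for comparing two copies of the hosts file, assuming the second node's file has been copied locally first (for example to /tmp/hosts.f2); `hosts_ip` and `compare_hosts` are hypothetical helpers, not part of any cluster package:

```shell
#!/bin/bash
# hosts_ip FILE NAME: print the address that FILE maps to NAME (first match),
# ignoring comments. Hypothetical helper for illustration only.
hosts_ip() {
    awk -v name="$2" '
        { sub(/#.*/, "") }                  # strip comments, re-split fields
        NF >= 2 {
            for (i = 2; i <= NF; i++)
                if ($i == name) { print $1; exit }
        }' "$1"
}

# compare_hosts FILE_A FILE_B NAME...: print every NAME that resolves
# differently in the two files and return non-zero if any differ.
compare_hosts() {
    local a=$1 b=$2 name rc=0
    shift 2
    for name in "$@"; do
        if [ "$(hosts_ip "$a" "$name")" != "$(hosts_ip "$b" "$name")" ]; then
            echo "MISMATCH: $name" >&2
            rc=1
        fi
    done
    return $rc
}
```

On f1, something like `compare_hosts /etc/hosts /tmp/hosts.f2 f.cluster.com nodehb1.cluster.com nodehb2.cluster.com` reports any name that maps to different addresses on the two nodes.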

Configure Quorum Disk

Initialising /dev/sdb as a quorum disk with the label clusterqdisk.

[root@f1 ~]# mkqdisk -c /dev/sdb -l clusterqdisk

mkqdisk v3.0.12.1

Writing new quorum disk label 'clusterqdisk' to /dev/sdb.

WARNING: About to destroy all data on /dev/sdb; proceed [N/y] ? y

Initializing status block for node 1...

Initializing status block for node 2...

Initializing status block for node 3...

Initializing status block for node 4...

Initializing status block for node 5...

Initializing status block for node 6...

Initializing status block for node 7...

Initializing status block for node 8...

Initializing status block for node 9...

Initializing status block for node 10...

Initializing status block for node 11...

Initializing status block for node 12...

Initializing status block for node 13...

Initializing status block for node 14...

Initializing status block for node 15...

Initializing status block for node 16...

Listing the qdisk.

[root@f1 ~]# mkqdisk -L
mkqdisk v3.0.12.1

/dev/block/8:16:
/dev/disk/by-id/scsi-36000c2992039128443a1133129efddaf:
/dev/disk/by-id/wwn-0x6000c2992039128443a1133129efddaf:
/dev/disk/by-path/pci-0000:04:00.0-scsi-0:0:0:0:
/dev/sdb:
        Magic:                eb7a62c2
        Label:                clusterqdisk
        Created:              Sat Sep 8 21:50:58 2018
        Host:                 f1.cluster.com
        Kernel Sector Size:   512
        Recorded Sector Size: 512
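Because mkqdisk -L scans the node's own block devices, running it on the second node as well is a quick way to confirm that both nodes see the same shared quorum disk. A small sketch that checks the listing for a given label; `qdisk_has_label` is a hypothetical helper that only parses the text output:

```shell
#!/bin/bash
# qdisk_has_label LABEL: read `mkqdisk -L` output on stdin and succeed only
# if a quorum disk with exactly that label is listed.
qdisk_has_label() {
    awk -v want="$1" '
        $1 == "Label:" && $2 == want { found = 1 }
        END { exit(found ? 0 : 1) }'
}
```

On each node, `mkqdisk -L | qdisk_has_label clusterqdisk && echo "quorum disk visible"` should succeed.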

Configure ricci User

Setting the password for the ricci user on both nodes.

[root@f1 ~]# passwd ricci

Changing password for user ricci.

New password:

Retype new password:

passwd: all authentication tokens updated successfully.

[root@f2 ~]# passwd ricci

Changing password for user ricci.

New password:

Retype new password:

passwd: all authentication tokens updated successfully.

Starting the ricci service on both nodes.

[root@f1 ~]# service ricci start

Starting oddjobd: [ OK ]

generating SSL certificates... done

Generating NSS database... done

Starting ricci: [ OK ]

[root@f2 ~]# service ricci start

Starting oddjobd: [ OK ]

generating SSL certificates... done

Generating NSS database... done

Starting ricci: [ OK ]

Configure The Cluster

Creating the cluster named HAwebcluster.

[root@f1 ~]# ccs --host f1.cluster.com --createcluster HAwebcluster

f1.cluster.com password:

Adding nodes to the cluster.

[root@f1 ~]# ccs --host f1.cluster.com --addnode nodehb1.cluster.com --nodeid="1"

Node nodehb1.cluster.com added.

[root@f1 ~]# ccs --host f1.cluster.com --addnode nodehb2.cluster.com --nodeid="2"

Node nodehb2.cluster.com added.

Syncing the cluster configuration to node 2.

[root@f1 ~]# ccs -h f1.cluster.com --sync --activate

node2.cluster.com password:
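The cluster-creation steps above can be collected into one function so they are easy to repeat or review. A sketch under these assumptions: the host, cluster and node names are the ones used in this post, node IDs are assigned in list order, and the hypothetical `run` wrapper only prints each ccs command unless DRY_RUN=0 is set:

```shell
#!/bin/bash
# Names taken from this post; override via the environment if needed.
CCS_HOST=${CCS_HOST:-f1.cluster.com}
CLUSTER_NAME=${CLUSTER_NAME:-HAwebcluster}
NODES="nodehb1.cluster.com nodehb2.cluster.com"

# run CMD...: print the command when DRY_RUN=1 (the default), else execute it.
run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

# create_cluster: create the cluster, add each node with an increasing
# node ID starting at 1, then push and activate the configuration.
create_cluster() {
    local id=1 node
    run ccs --host "$CCS_HOST" --createcluster "$CLUSTER_NAME"
    for node in $NODES; do
        run ccs --host "$CCS_HOST" --addnode "$node" --nodeid="$id"
        id=$((id + 1))
    done
    run ccs --host "$CCS_HOST" --sync --activate
}
```

Calling `create_cluster` prints the four ccs commands for review; `DRY_RUN=0 create_cluster` would actually run them.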

Configure Fencing Agent

Listing the available fence agents. I have omitted all the others from the output, as we will be using fence_vmware_soap with vCenter.

[root@f1 ~]# ccs -h localhost --lsfenceopts

fence_vmware_soap - Fence agent for VMWare over SOAP API

Checking the options supported by the fence_vmware_soap agent.

[root@f1 ~]# ccs -h localhost --lsfenceopts fence_vmware_soap

fence_vmware_soap - Fence agent for VMWare over SOAP API

Required Options:

Optional Options:

option: No description available

action: Fencing Action

ipaddr: IP Address or Hostname

login: Login Name

passwd: Login password or passphrase

passwd_script: Script to retrieve password

ssl: SSL connection

port: Physical plug number or name of virtual machine

uuid: The UUID of the virtual machine to fence.

ipport: TCP port to use for connection with device

verbose: Verbose mode

debug: Write debug information to given file

version: Display version information and exit

help: Display help and exit

separator: Separator for CSV created by operation list

power_timeout: Test X seconds for status change after ON/OFF

shell_timeout: Wait X seconds for cmd prompt after issuing command

login_timeout: Wait X seconds for cmd prompt after login

power_wait: Wait X seconds after issuing ON/OFF

delay: Wait X seconds before fencing is started

retry_on: Count of attempts to retry power on

Adding the fence device named vmwaresoap.

[root@f1 ~]# ccs --host f1.cluster.com --addfencedev vmwaresoap agent=fence_vmware_soap login='fencetest@cas.local' passwd='FenceTest@123' power_wait=60 ipaddr=192.168.200.212

We then add a fence method named soap to both nodes.

[root@f1 ~]# ccs -h f1.cluster.com --addmethod soap nodehb1.cluster.com

Method soap added to nodehb1.cluster.com.

[root@f1 ~]# ccs -h f1.cluster.com --addmethod soap nodehb2.cluster.com

Method soap added to nodehb2.cluster.com.

We can obtain the VM names and UUIDs with the list action (-o list) of fence_vmware_soap.

[root@f2 ~]# fence_vmware_soap -z -l 'fencetest@cas.local' -p 'FenceTest@123' -a 192.168.200.212 -o list

Node2-CMAN,564d2dd9-e637-651e-d4e2-b0f484fb3772

Node1-CMAN,564dd8c1-4f16-fb09-eb8d-ac6dd7d1e806

We then use each VM name and UUID to add a fence instance for the corresponding node, with the fencing action set to reboot.

[root@f1 ~]# ccs -h f1.cluster.com --addfenceinst vmwaresoap nodehb1.cluster.com soap action="reboot" port='Node1-CMAN' ssl="on" uuid='564dd8c1-4f16-fb09-eb8d-ac6dd7d1e806'

[root@f1 ~]# ccs -h f1.cluster.com --addfenceinst vmwaresoap nodehb2.cluster.com soap action="reboot" port='Node2-CMAN' ssl="on" uuid='564d2dd9-e637-651e-d4e2-b0f484fb3772'
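Copying UUIDs by hand is error-prone, so the --addfenceinst commands can instead be generated from the list output. A minimal sketch, assuming the list output has been saved to a file (for example /tmp/vms.txt) and that you supply the mapping from cluster node name to vCenter VM name yourself; `fenceinst_cmd` is a hypothetical helper that only prints the command for review:

```shell
#!/bin/bash
# fenceinst_cmd CCS_HOST CLUSTER_NODE VM_NAME
# Reads `fence_vmware_soap ... -o list` output (VM_NAME,UUID per line) on
# stdin and prints the matching ccs --addfenceinst command. Fails if the VM
# name is not in the list.
fenceinst_cmd() {
    local host=$1 node=$2 vm=$3 uuid
    uuid=$(awk -F, -v vm="$vm" '$1 == vm { print $2; exit }')
    if [ -z "$uuid" ]; then
        echo "VM $vm not found in list output" >&2
        return 1
    fi
    printf "ccs -h %s --addfenceinst vmwaresoap %s soap action=\"reboot\" port='%s' ssl=\"on\" uuid='%s'\n" \
        "$host" "$node" "$vm" "$uuid"
}
```

For example, `fenceinst_cmd f1.cluster.com nodehb1.cluster.com Node1-CMAN < /tmp/vms.txt` prints the same command used above for the first node.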

The following command pushes the updated cluster.conf to the second node and activates it.

[root@f1 ~]# ccs -h f1.cluster.com --sync --activate

We add the quorum disk configuration for /dev/sdb as follows.

[root@f1 ~]# ccs -h f1.cluster.com --setquorumd device=/dev/sdb

[root@f1 ~]# ccs -h f2.cluster.com --setquorumd device=/dev/sdb

We then synchronise the configuration.

[root@f1 ~]# ccs -h localhost --sync --activate
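The quorum disk changes the vote count. As a worked example of the `<cman expected_votes="3"/>` value in the final cluster.conf, here is the arithmetic as a small script, assuming each node has the default of 1 vote and qdiskd advertises its default of one vote fewer than the number of nodes:

```shell
#!/bin/bash
# expected_votes NODES: node votes (1 each) plus the quorum disk votes
# (assumed default: NODES - 1).
expected_votes() {
    local nodes=$1
    echo $(( nodes + (nodes - 1) ))
}

# quorum_needed EXPECTED: cman needs a strict majority of the expected votes.
quorum_needed() {
    echo $(( $1 / 2 + 1 ))
}

# Two-node cluster from this post: 1 + 1 node votes + 1 quorum disk vote.
ev=$(expected_votes 2)
echo "expected_votes=$ev quorum=$(quorum_needed "$ev")"
```

For two nodes this prints `expected_votes=3 quorum=2`: one surviving node plus the quorum disk vote still holds 2 of 3 votes, so the cluster stays quorate when the other node goes down.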

Start The Cluster Services

Now we start the cman service on both nodes; it starts successfully.

[root@f1 log]# service cman start

Starting cluster:

Checking if cluster has been disabled at boot... [ OK ]

Checking Network Manager... [ OK ]

Global setup... [ OK ]

Loading kernel modules... [ OK ]

Mounting configfs... [ OK ]

Starting cman... [ OK ]

Starting qdiskd... [ OK ]

Waiting for quorum... [ OK ]

Starting fenced... [ OK ]

Starting dlm_controld... [ OK ]

Tuning DLM kernel config... [ OK ]

Starting gfs_controld... [ OK ]

Unfencing self... [ OK ]

Joining fence domain... [ OK ]

[root@f2 ~]# service cman start

Starting cluster:

Checking if cluster has been disabled at boot... [ OK ]

Checking Network Manager... [ OK ]

Global setup... [ OK ]

Loading kernel modules... [ OK ]

Mounting configfs... [ OK ]

Starting cman... [ OK ]

Starting qdiskd... [ OK ]

Waiting for quorum... [ OK ]

Starting fenced... [ OK ]

Starting dlm_controld... [ OK ]

Tuning DLM kernel config... [ OK ]

Starting gfs_controld... [ OK ]

Unfencing self... [ OK ]

Joining fence domain... [ OK ]

Then we start the rgmanager (Resource Group Manager) service on both nodes.

[root@f1 ~]# service rgmanager start

Starting Cluster Service Manager: [ OK ]

[root@f2 ~]# service rgmanager start

Starting Cluster Service Manager: [ OK ]

Enabling the cluster services to start at boot on both nodes.

[root@f1 ~]# chkconfig rgmanager on

[root@f1 ~]# chkconfig ricci on

[root@f1 ~]# chkconfig cman on

[root@f2 ~]# chkconfig rgmanager on

[root@f2 ~]# chkconfig ricci on

[root@f2 ~]# chkconfig cman on
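To confirm that the chkconfig changes took effect, a sketch that parses `chkconfig --list` output. It assumes the nodes boot to runlevel 3, and `enabled_in` and `check_services` are hypothetical helpers:

```shell
#!/bin/bash
# Services enabled with chkconfig above.
SERVICES="cman rgmanager ricci"

# enabled_in SERVICE LEVEL: read `chkconfig --list` output on stdin and
# succeed only if SERVICE is marked "on" for runlevel LEVEL.
enabled_in() {
    awk -v svc="$1" -v want="$2:on" '
        $1 == svc { for (i = 2; i <= NF; i++) if ($i == want) found = 1 }
        END { exit(found ? 0 : 1) }'
}

# check_services [LEVEL]: report any cluster service not enabled in LEVEL
# (default 3) and return non-zero if one is missing.
check_services() {
    local level=${1:-3} listing svc rc=0
    listing=$(chkconfig --list 2>/dev/null)
    for svc in $SERVICES; do
        if ! printf '%s\n' "$listing" | enabled_in "$svc" "$level"; then
            echo "$svc is not enabled in runlevel $level" >&2
            rc=1
        fi
    done
    return $rc
}
```

Running `check_services` on each node prints nothing and returns 0 when all three services are enabled.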

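Setting the quorum disk label to clusterqdisk, so that qdiskd finds the quorum disk by its label rather than by a device name such as /dev/sdb, which may differ between nodes or across reboots.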

[root@f1 ~]# ccs -h f1.cluster.com --setquorumd label=clusterqdisk

Configure HA-LVM

For HA-LVM, the locking_type parameter in /etc/lvm/lvm.conf must be set to 1 (local, file-based locking) on both nodes.

[root@f1 ~]# grep locking_type /etc/lvm/lvm.conf |grep -v '#'

locking_type = 1

[root@f2 ~]# grep locking_type /etc/lvm/lvm.conf |grep -v '#'

locking_type = 1
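The `grep -v '#'` check above discards every line containing a `#`, so a setting followed by a trailing comment would be missed. A sketch of a slightly more careful lookup, assuming simple `key = value` lines; it ignores lvm.conf sections and prints the last uncommented match, and `lvm_setting` is a hypothetical helper:

```shell
#!/bin/bash
# lvm_setting FILE KEY: print the value of KEY from an lvm.conf-style file,
# ignoring commented-out lines and trailing comments. Sections are ignored;
# if KEY appears more than once, the last uncommented value is printed.
lvm_setting() {
    awk -F= -v key="$2" '
        { sub(/#.*/, "") }                         # drop comments, re-split on "="
        { k = $1; gsub(/[ \t]/, "", k) }           # key without whitespace
        k == key && NF >= 2 {
            v = $2
            gsub(/^[ \t]+|[ \t]+$/, "", v)         # trim the value
            val = v
        }
        END { if (val != "") print val }' "$1"
}
```

On both nodes, `lvm_setting /etc/lvm/lvm.conf locking_type` should print `1`.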

Creating the LVM physical volume, volume group and logical volume on the shared storage, using the partition /dev/sdc1 on the shared disk.

[root@f1 ~]# pvcreate /dev/sdc1

Physical volume "/dev/sdc1" successfully created

We pass -c n when creating the volume group so that it is created as a non-clustered volume group, which is what HA-LVM expects.

[root@f1 ~]# vgcreate -c n vg_web /dev/sdc1

Volume group "vg_web" successfully created

[root@f1 ~]# lvcreate -L 10G -n lv_web vg_web

Logical volume "lv_web" created

Listing the physical volumes, volume groups and logical volumes.

[root@f1 ~]# pvs
  PV         VG       Fmt  Attr PSize  PFree
  /dev/sda2  VolGroup lvm2 a--  59.51g    0
  /dev/sdc1  vg_web   lvm2 a--  16.00g 6.00g

[root@f1 ~]# vgs
  VG       #PV #LV #SN Attr   VSize  VFree
  VolGroup   1   3   0 wz--n- 59.51g    0
  vg_web     1   1   0 wz--n- 16.00g 6.00g

[root@f1 ~]# lvs
  LV      VG       Attr       LSize  Pool Origin Data%  Move Log Cpy%Sync Convert
  lv_home VolGroup -wi-ao----  4.76g
  lv_root VolGroup -wi-ao---- 48.75g
  lv_swap VolGroup -wi-ao----  6.00g
  lv_web  vg_web   -wi-a----- 10.00g

Formatting the new logical volume with an ext4 filesystem labelled ha_web.

[root@f1 ~]# mkfs.ext4 -L ha_web /dev/vg_web/lv_web

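We edit the volume_list setting in /etc/lvm/lvm.conf on each node as follows. The OS itself activates only the "VolGroup" volume group; the "@" entry allows a node to activate volumes tagged with its own cluster node name, which is how the cluster's lvm resource activates vg_web, created above, on whichever node runs the service.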
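The host tag in volume_list has to match the node's name in cluster.conf exactly; otherwise the cluster's lvm resource will typically fail to activate vg_web on that node. A sketch that builds the expected entry and checks an lvm.conf for it, assuming the root volume group is VolGroup; `volume_list_line` and `check_volume_list` are hypothetical helpers:

```shell
#!/bin/bash
# volume_list_line ROOT_VG CLUSTER_NODE: print the volume_list entry that
# allows ROOT_VG plus volumes tagged with this node's cluster name.
volume_list_line() {
    printf 'volume_list = [ "%s", "@%s" ]\n' "$1" "$2"
}

# check_volume_list FILE ROOT_VG CLUSTER_NODE: succeed if FILE has an
# uncommented line equal to the expected entry (whitespace-insensitive).
check_volume_list() {
    local want
    want=$(volume_list_line "$2" "$3" | tr -d ' \t')
    grep -v '^[[:space:]]*#' "$1" | tr -d ' \t' | grep -qxF "$want"
}
```

On f2, for example, `check_volume_list /etc/lvm/lvm.conf VolGroup nodehb2.cluster.com || echo "volume_list does not match"` catches a mistyped host tag before the initramfs is rebuilt.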

[root@f1 ~]# grep volume_list /etc/lvm/lvm.conf | grep -v "#"

volume_list = [ "VolGroup", "@nodehb1.cluster.com" ]

[root@f2 ~]# grep volume_list /etc/lvm/lvm.conf | grep -v "#"

volume_list = [ "VolGroup", "@nodehb2.cluster.com" ]

After taking a backup of the initramfs image, we make the change effective at boot by rebuilding the initramfs with dracut, so that it picks up the new lvm.conf, and then rebooting the nodes.

[root@f1 ~]# cp -p /boot/initramfs-$(uname -r).img /boot/initramfs-$(uname -r).img.bak

[root@f1 ~]# dracut --hostonly --force /boot/initramfs-$(uname -r).img $(uname -r)

[root@f1 ~]# reboot

[root@f2 ~]# cp -p /boot/initramfs-$(uname -r).img /boot/initramfs-$(uname -r).img.bak

[root@f2 ~]# dracut --hostonly --force /boot/initramfs-$(uname -r).img $(uname -r)

[root@f2 ~]# reboot
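The backup-and-rebuild steps above can be wrapped in a small function so that exactly the same commands run on each node. A sketch, assuming the running kernel is the one that will boot next; `rebuild_initramfs` and the DRY_RUN switch are hypothetical, and by default the function only prints the commands:

```shell
#!/bin/bash
# run CMD...: print the command when DRY_RUN=1 (the default), else execute it.
run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

# rebuild_initramfs [KERNEL_VERSION]: back up the initramfs for the given
# kernel (default: running kernel), then rebuild it with dracut.
rebuild_initramfs() {
    local kver=${1:-$(uname -r)}
    local img=/boot/initramfs-$kver.img
    run cp -p "$img" "$img.bak" || return 1   # stop if the backup fails
    run dracut --hostonly --force "$img" "$kver"
}
```

`DRY_RUN=0 rebuild_initramfs` followed by a reboot matches the manual steps above.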

Configure Web Server

We install the Apache web server on both nodes and create the mount point for the web content.

[root@f1 ~]# yum groupinstall "Web Server"

[root@f2 ~]# yum groupinstall "Web Server"

[root@f1 ~]# mkdir /web/www -p

[root@f2 ~]# mkdir /web/www -p

Create Resources And Service Groups

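Creating the resources (the floating IP, the HA-LVM volume, the ext4 filesystem and the Apache init script), the web_service service, and the subservices that attach each resource to the service.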

[root@f1 ~]# ccs --host f1.cluster.com --addresource ip address=192.168.200.177 monitor_link=on sleeptime=10

[root@f1 ~]# ccs --host f1.cluster.com --addresource lvm lv_name=lv_web name=web_HA_LVM self_fence=on vg_name=vg_web

[root@f1 ~]# ccs --host f1.cluster.com --addresource fs device=/dev/vg_web/lv_web fstype=ext4 mountpoint=/web/www/ name=web-filesystem self_fence=on

[root@f1 ~]# ccs --host f1.cluster.com --addresource script file=/etc/init.d/httpd name=apache-script

[root@f1 ~]# ccs --host f1.cluster.com --addservice web_service max_restarts=3 name=web_service recovery=restart restart_expire_time=3600

[root@f1 ~]# ccs --host f1.cluster.com --addsubservice web_service ip ref=192.168.200.177

[root@f1 ~]# ccs --host f1.cluster.com --addsubservice web_service lvm ref=web_HA_LVM

[root@f1 ~]# ccs --host f1.cluster.com --addsubservice web_service fs ref="web-filesystem"

[root@f1 ~]# ccs --host f1.cluster.com --addsubservice web_service script ref="apache-script"

Sync And Start The Cluster

Syncing the configuration to both nodes, confirming that they are in sync and starting the cluster services on all nodes.

[root@f1 ~]# ccs --host f1.cluster.com --sync --activate

[root@f1 ~]# ccs --host f1.cluster.com --checkconf

All nodes in sync.

[root@f1 ~]# ccs --host f1.cluster.com --startall

Started nodehb1.cluster.com

Started nodehb2.cluster.com

Checking the cluster status on both nodes.

[root@f2 ~]# clustat
Cluster Status for HAwebcluster @ Mon Sep 10 15:19:41 2018
Member Status: Quorate

 Member Name                  ID   Status
 ------ ----                  ---- ------
 nodehb1.cluster.com             1 Online, rgmanager
 nodehb2.cluster.com             2 Online, Local, rgmanager
 /dev/block/8:16                 0 Online, Quorum Disk

 Service Name                 Owner (Last)                 State
 ------- ----                 ----- ------                 -----
 service:web_service          nodehb2.cluster.com          started

[root@f1 ~]# clustat
Cluster Status for HAwebcluster @ Mon Sep 10 15:20:43 2018
Member Status: Quorate

 Member Name                  ID   Status
 ------ ----                  ---- ------
 nodehb1.cluster.com             1 Online, Local, rgmanager
 nodehb2.cluster.com             2 Online, rgmanager
 /dev/block/8:16                 0 Online, Quorum Disk

 Service Name                 Owner (Last)                 State
 ------- ----                 ----- ------                 -----
 service:web_service          nodehb2.cluster.com          started
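For scripted checks, for example during failover tests, the owner and state of web_service can be pulled out of clustat output. A sketch, assuming the column layout shown above; `service_owner` is a hypothetical helper:

```shell
#!/bin/bash
# service_owner SERVICE: read `clustat` output on stdin and print the owner
# and state of service:SERVICE; fail if the service is not listed.
service_owner() {
    awk -v svc="service:$1" '
        $1 == svc { print $2, $NF; found = 1; exit }
        END { exit(found ? 0 : 1) }'
}
```

With the output above, `clustat | service_owner web_service` prints `nodehb2.cluster.com started` on either node.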

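Checking the mounted filesystems on both nodes: /web/www is mounted only on f2 (nodehb2.cluster.com), which currently owns web_service.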

[root@f2 ~]# df -h
Filesystem                    Size  Used Avail Use% Mounted on
/dev/mapper/VolGroup-lv_root   48G  3.5G   43G   8% /
tmpfs                         3.9G   23M  3.9G   1% /dev/shm
/dev/sda1                     485M   45M  415M  10% /boot
/dev/mapper/VolGroup-lv_home  4.7G  138M  4.4G   4% /home
/dev/mapper/vg_web-lv_web     9.9G  151M  9.2G   2% /web/www

[root@f1 ~]# df -h
Filesystem                    Size  Used Avail Use% Mounted on
/dev/mapper/VolGroup-lv_root   48G  3.5G   43G   8% /
tmpfs                         3.9G   23M  3.9G   1% /dev/shm
/dev/sda1                     485M   45M  415M  10% /boot
/dev/mapper/VolGroup-lv_home  4.7G  138M  4.4G   4% /home

Cluster.conf File

The final cluster configuration in /etc/cluster/cluster.conf:

<?xml version="1.0"?>
<cluster config_version="39" name="HAwebcluster">
    <clusternodes>
        <clusternode name="nodehb1.cluster.com" nodeid="1">
            <fence>
                <method name="soap">
                    <device action="reboot" name="vmwaresoap" port="Node1-CMAN" ssl="on" uuid="564dd8c1-4f16-fb09-eb8d-ac6dd7d1e806"/>
                </method>
            </fence>
        </clusternode>
        <clusternode name="nodehb2.cluster.com" nodeid="2">
            <fence>
                <method name="soap">
                    <device action="reboot" name="vmwaresoap" port="Node2-CMAN" ssl="on" uuid="564d2dd9-e637-651e-d4e2-b0f484fb3772"/>
                </method>
            </fence>
        </clusternode>
    </clusternodes>
    <cman expected_votes="3"/>
    <rm>
        <resources>
            <ip address="192.168.200.177" monitor_link="on" sleeptime="10"/>
            <fs device="/dev/vg_web/lv_web" fstype="ext4" mountpoint="/web/www/" name="web-filesystem" self_fence="on"/>
            <script file="/etc/init.d/httpd" name="apache-script"/>
            <lvm lv_name="lv_web" name="web_HA_LVM" self_fence="1" vg_name="vg_web"/>
        </resources>
        <service exclusive="1" max_restarts="3" name="web_service" recovery="restart" restart_expire_time="3600">
            <ip ref="192.168.200.177"/>
            <lvm ref="web_HA_LVM"/>
            <fs ref="web-filesystem"/>
            <script ref="apache-script"/>
        </service>
    </rm>
    <quorumd label="clusterqdisk"/>
    <logging debug="on"/>
    <fencedevices>
        <fencedevice agent="fence_vmware_soap" ipaddr="192.168.200.212" login="fencetest@cas.local" name="vmwaresoap" passwd="FenceTest@123" power_wait="60"/>
    </fencedevices>
</cluster>

Testing The Cluster For Any Issues

While testing the fence_vmware_soap agent, the fence_node command failed. I will investigate the issue further and update this post.

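Edit: Fencing failed because a fence device parameter was missing. After I added ssl="on" to the fence instance of both nodes in cluster.conf, cluster fencing worked. The output below shows the original failure: the manual fence_vmware_soap call with -z (SSL) succeeds, while the agent arguments printed by fence_node contain no ssl option.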

[root@f1 ~]# fence_vmware_soap -z -l 'fencetest@cas.local' -p 'FenceTest@123' -a 192.168.200.212 -o reboot -n Node2-CMAN

Success: Rebooted

[root@f1 ~]# fence_node -vv nodehb2.cluster.com

fence nodehb2.cluster.com dev 0.0 agent fence_vmware_soap result: error from agent

agent args: port=Node2-CMAN uuid=564d2dd9-e637-651e-d4e2-b0f484fb3772 nodename=nodehb2.cluster.com action=reboot agent=fence_vmware_soap ipaddr=192.168.200.212 login=fencetest@cas.local passwd=FenceTest@123 power_wait=60

fence nodehb2.cluster.com failed
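Since the failure came down to a missing ssl option, a quick check of cluster.conf before running fence_node can save a round trip. A sketch, assuming each fence `<device .../>` element sits on one line as ccs writes it; `fence_ssl_ok` is a hypothetical helper and only inspects per-node device entries, not the shared fencedevice definition:

```shell
#!/bin/bash
# fence_ssl_ok FILE: succeed only if every <device .../> line in a
# cluster.conf sets ssl="on"; offending lines are printed to stderr.
fence_ssl_ok() {
    local bad
    bad=$(grep '<device ' "$1" | grep -v 'ssl="on"')
    [ -z "$bad" ] && return 0
    printf '%s\n' "$bad" >&2
    return 1
}
```

For example, `fence_ssl_ok /etc/cluster/cluster.conf && fence_node -vv nodehb2.cluster.com` only attempts the fence when every device entry has SSL enabled.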

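Next, we reboot the second node and check the cluster status from the first node. web_service has failed over to nodehb1.cluster.com, and nodehb2.cluster.com is back in the cluster but does not yet show rgmanager.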

[root@f2 ~]# reboot

[root@f1 ~]# clustat
Cluster Status for HAwebcluster @ Mon Sep 10 15:25:07 2018
Member Status: Quorate

 Member Name                  ID   Status
 ------ ----                  ---- ------
 nodehb1.cluster.com             1 Online, Local, rgmanager
 nodehb2.cluster.com             2 Online
 /dev/block/8:16                 0 Online, Quorum Disk

 Service Name                 Owner (Last)                 State
 ------- ----                 ----- ------                 -----
 service:web_service          nodehb1.cluster.com          started

While the service moved from one node to the other, pings to the floating IP lost 4 replies.
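The packet loss during a failover can be measured by letting ping run across the switchover and reading its summary line. A sketch, assuming the iputils ping summary format and a 60-second window (an arbitrary choice); `lost_pings` is a hypothetical helper:

```shell
#!/bin/bash
# lost_pings: read ping output on stdin and print transmitted minus received
# from the summary line, e.g.
# "60 packets transmitted, 56 received, 6% packet loss, time 59083ms".
lost_pings() {
    awk '/packets transmitted/ { print $1 - $4; found = 1 }
         END { exit(found ? 0 : 1) }'
}
```

From a client, `ping -c 60 192.168.200.177 | lost_pings` started just before rebooting the active node prints the number of replies lost during the switchover.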

When we log in through the floating IP 192.168.200.177, we land on node 1 (f1), confirming that the service is now running there.

[root@srv ~]# ssh root@192.168.200.177

The authenticity of host '192.168.200.177 (192.168.200.177)' can't be established.

RSA key fingerprint is 08:51:b8:8b:90:50:1e:5c:a7:88:ca:ce:06:cf:4d:1b.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '192.168.200.177' (RSA) to the list of known hosts.

root@192.168.200.177's password:

Last login: Mon Sep 10 15:22:18 2018 from 192.168.200.155

[root@f1 ~]#

We will perform more tests in the third part of this series.
